How to rate Inconsistency

The text below is taken from the GRADE workinggroup official JCE series, article number 7:

GRADE guidelines: 7. Rating the quality of evidence—inconsistency

Large inconsistency demands a search for an explanation

Systematic review authors should be prepared to face inconsistency in the results. In the early (protocol) stages of their review, they should consider the diversity of patients, interventions, outcomes that may be appropriate to include. Reviewers should combine results only if, across the range of patients, interventions, and outcomes considered, it is plausible that the underlying magnitude of treatment effect is similar [7]. This decision is a matter of judgment. In general, we suggest beginning by pooling widely, and then testing whether the assumption of similar effects across studies holds. This approach necessitates generating a priori hypotheses regarding possible explanations of variability of results.

If systematic review authors find that the magnitude of intervention effects differs across studies, explanations may lie in the population (e.g., disease severity), the interventions (e.g., doses, cointerventions, comparison interventions), the outcomes (e.g., duration of follow-up), or the study methods (e.g., randomized trials with higher and lower risk of bias). If one of the first three categories provides the explanation, review authors should offer different estimates across patient groups, interventions, or outcomes. Guideline panelists are then likely to offer different recommendations for different patient groups and interventions. If study methods provide a compelling explanation for differences in results between studies, then authors should consider focusing on effect estimates from studies with a lower risk of bias.

If large variability (often referred to as heterogeneity) in magnitude of effect remains unexplained, the quality of evidence decreases. In this article, we provide guidance concerning how to judge whether inconsistency in results is sufficient to rate down the quality of evidence, and when to believe apparent explanations of inconsistency (subgroup analyses).

Four criteria for assessing inconsistency in results

Reviewers should consider rating down for inconsistency when

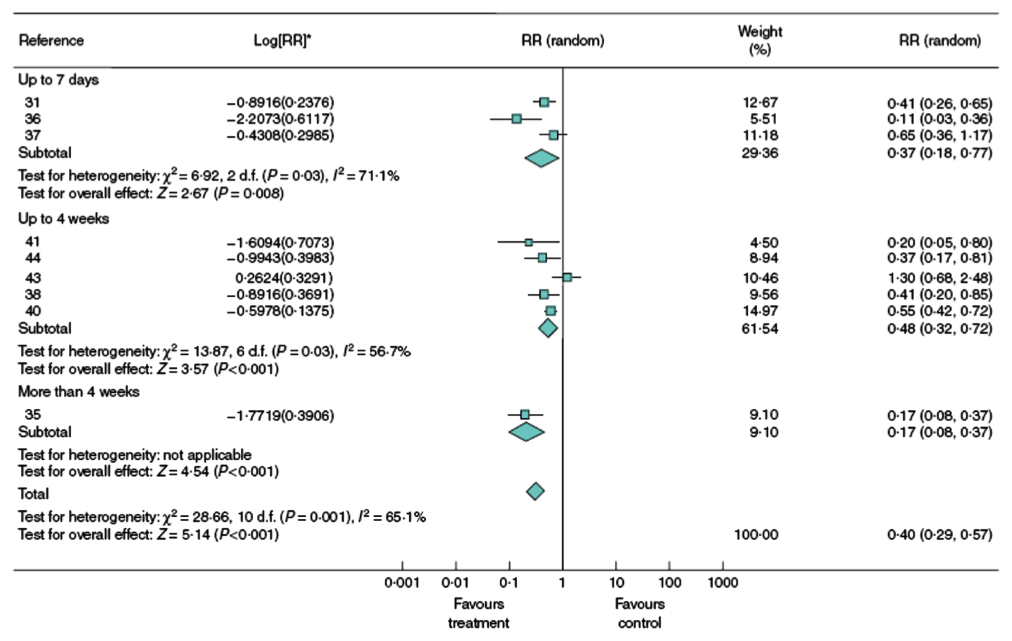

1.Point estimates vary widely across studies;

2.Confidence intervals (CIs) show minimal or no overlap;

3.The statistical test for heterogeneity—which tests the null hypothesis that all studies in a meta-analysis have the same underlying magnitude of effect—shows a low P-value;

4.The I2—which quantifies the proportion of the variation in point estimates due to among-study differences—is large.

One may ask: what is a large I2? One set of criteria would say that an I2 of less than 40% is low, 30–60% may be moderate, 50–90% may be substantial, and 75–100% is considerable [8]. Note the overlapping ranges, and the equivocation (“may be”): an implicit acknowledgment that the thresholds are both arbitrary and uncertain.

Furthermore, although it does not—in contrast to tests for heterogeneity—depend on the number of studies, I2 shares limitations traditionally associated with tests for heterogeneity. When individual study sample sizes are small, point estimates may vary substantially but, because variation may be explained by chance, I2 may be low. Conversely, when study sample size is large, a relatively small difference in point estimates can yield a large I2 [9]. Another statistic, τ2 (tau square) is a measure of the variability that has an advantage over other measures in that it is not dependent on sample size [9]. So far, however, it has not seen much use. All statistical approaches have limitations, and their results should be seen in the context of a subjective examination of the variability in point estimates and the overlap in CIs."

Go to the orginal article for full text, or go to a specific chapter in the article:

This article deals with binary/dichotomous outcomes, and inconsistency in relative, not absolute, measures of effect

See the Assessing Inconsistency Training video from McMaster CE&B GRADE site: http://cebgrade.mcmaster.ca/Inconsistency/index.html