How to rate the Quality of Evidence (your confidence in the effect estimate)

________ The 4 levels of Certainty in effect estimates / Quality of evidence _________

High: We are very confident in the evidence supporting the recommendation.

Further research is very unlikely to change the estimates of effect.

Moderate: We are moderately confident in the evidence supporting the recommendation.

Further research could have an important impact, which may change the estimates of effect.

Low: We have only low confidence in the evidence supporting the recommendation.

Further research is very likely to have an important impact, which is likely to change the estimate of effect.

Very low: Any estimate of effect is very uncertain.

_______________________________________________________________________

See this topic in the GRADE handbook: Quality of evidence

GRADE guidelines: 3. Rating the quality of evidence (the GRADE Working Group's official JCE series)

"The optimal application of GRADE requires systematic reviews of the impact of alternative management approaches on all patient-important outcomes [1]. In the context of a systematic review, the ratings of the quality of evidence reflect the extent of our confidence that the estimates of the effect are correct. In the context of making recommendations, the quality ratings reflect the extent of our confidence that the estimates of an effect are adequate to support a particular decision or recommendation."

Go to the original article for the full text, or go to a specific section of the article:

What we do not mean by quality of evidence

Opinion is not evidence

A particular quality of evidence does not necessarily imply a particular strength of recommendation

So what do we mean by “quality of evidence”?

Quality in GRADE means more than risk of bias

GRADE specifies four categories for the quality of a body of evidence

Arriving at a quality rating

Rationale for using GRADE’s definition of quality

From the GRADE Working Group FAQ site:

High quality/confidence: Further research is very unlikely to change our confidence in the estimate of effect.

Moderate quality/confidence: Further research is likely to have an important impact on our confidence in the estimate of effect and may change the estimate.

Low quality/confidence: Further research is very likely to have an important impact on our confidence in the estimate of effect and is likely to change the estimate.

Very low quality/confidence: We are very uncertain about the estimate.

_________________

Methods Commentary: Rating Confidence in Estimates of Effect

Introduction

Systematic review authors are familiar with assessing risk of bias in individual studies. They are less familiar with assessing the extent of risk of bias on an outcome-by-outcome basis, and much less familiar with assessing the broader concept of confidence in estimates of effect (otherwise known as quality of evidence) across an entire body of evidence. This commentary introduces these issues in the context of systematic reviews of alternative management strategies – it is only indirectly applicable to systematic reviews of prognostic studies.

Why you might want to pay attention to this commentary

The most important reasons to attend to this commentary are, first, that you find its logic compelling and, second, that you would like to make your systematic review as useful as possible to your audience. A third reason, however, is that the concepts we present (initially developed by the GRADE Working Group, a group of methodologists and guideline developers) have been widely adopted, including by the Cochrane Collaboration and a host of guideline-developing organizations such as the World Health Organization, the American College of Physicians, the American Thoracic Society, and UpToDate.

To address confidence in estimates of effect, you need to consider more than risk of bias.

In the clinical epidemiological literature, “quality” commonly refers to a judgment on the internal validity (i.e. risk of bias) of an individual study. To arrive at a rating, reviewers of randomized trials consider features such as allocation concealment and blinding. In observational studies they consider appropriate measurement of exposure and outcome and appropriate control of confounding. In both controlled trials and observational studies they consider loss to follow-up, and may consider other aspects of design, conduct, and analysis that influence the risk of bias.

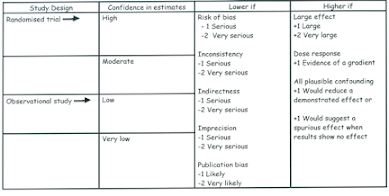

Systematic review authors need, however, to go beyond assessing individual studies – they must make judgments about a body of evidence. A body of evidence (for instance, a number of well-designed and well-executed trials) may be associated with a low risk of bias, but our confidence in effect estimates may still be compromised by a number of other factors (imprecision, inconsistency, indirectness, and publication bias). There are also factors, particularly relevant to observational studies, that may lead to rating up quality, including the magnitude of the treatment effect and the presence of a dose-response gradient (see figure).

GRADE/Cochrane specifies four categories for the quality of a body of evidence

Although the quality of evidence represents a continuum, the approach suggested by GRADE and adopted by Cochrane results in an assessment of the confidence in a body of evidence as high, moderate, low, or very low. The table below presents an approach to interpreting these four categories.

Significance of the four levels of evidence

High: We are very confident that the true effect lies close to that of the estimate of the effect.

Moderate: We are moderately confident in the effect estimate: The true effect is likely to be close to the estimate of the effect, but there is a possibility that it is substantially different.

Low: Our confidence in the effect estimate is limited: The true effect may be substantially different from the estimate of the effect.

Very low: We have very little confidence in the effect estimate: The true effect is likely to be substantially different from the estimate of effect.

Arriving at a confidence rating

When we speak of evaluating confidence, we are referring to an overall rating for each patient-important outcome across studies. The figure above summarizes our suggested approach to rating confidence in estimates, which begins with the study design (randomized controlled trials or observational studies) and then addresses five reasons to possibly rate down confidence and three reasons to possibly rate it up. A series of articles published in the Journal of Clinical Epidemiology presents detailed guidance on how to address each of these issues and arrive at the appropriate judgments [1-6].
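The sequence described above (start from the study design, then rate down or up) can be sketched in a few lines of Python. This is only an illustrative simplification, not an official GRADE tool: real ratings are judgments, not arithmetic. The function name, the dictionary keys, and the convention that randomized trials start at "high" and observational studies at "low" are assumptions made for this sketch; the third rate-up key, `plausible_confounding`, follows the GRADE guidance on rating up [6] and is not spelled out in this commentary.

```python
# Hypothetical helper: a simplified, arithmetic reading of the rating sequence.
LEVELS = ["very low", "low", "moderate", "high"]

# Assumed starting level by study design (index into LEVELS).
START = {"randomized": 3, "observational": 1}

# The five reasons to rate down and three reasons to rate up.
RATE_DOWN = {"risk_of_bias", "imprecision", "inconsistency",
             "indirectness", "publication_bias"}
RATE_UP = {"large_effect", "dose_response", "plausible_confounding"}


def rate_confidence(study_design, rate_down=None, rate_up=None):
    """Return a confidence level for ONE outcome across a body of evidence.

    rate_down / rate_up map a reason to the number of levels (1 or 2)
    by which the reviewer judged that confidence should move.
    """
    rate_down = rate_down or {}
    rate_up = rate_up or {}
    if study_design not in START:
        raise ValueError(f"unknown study design: {study_design!r}")
    unknown = (set(rate_down) - RATE_DOWN) | (set(rate_up) - RATE_UP)
    if unknown:
        raise ValueError(f"unknown rating reasons: {sorted(unknown)}")

    score = START[study_design] - sum(rate_down.values()) + sum(rate_up.values())
    # Clamp to the four available categories.
    return LEVELS[max(0, min(score, len(LEVELS) - 1))]


print(rate_confidence("randomized"))  # high
print(rate_confidence("randomized",
                      rate_down={"inconsistency": 1, "indirectness": 1}))  # low
print(rate_confidence("observational", rate_up={"large_effect": 1}))  # moderate
```

The value of writing it this way is mainly bookkeeping: each move up or down is tied to a named reason, which is the information a reader of the review needs to see.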

How ratings of confidence can benefit consumers of systematic reviews

To be useful to decision-makers, clinicians, and patients, systematic reviews must provide not only an estimate of effect for each outcome but also the information needed to judge whether these estimates are likely to be correct. What information about the studies in a review affects our confidence that the estimate of an effect is correct?

To answer this question, consider an example. Suppose you are told that a recent Cochrane review reported that, in patients with chronic pain, the number needed to treat for clinical success with topical salicylates was 6 compared with placebo. What additional information would you seek to help you decide whether to believe this estimate and how to apply it?

The most obvious questions might be: how many studies were pooled to get this estimate; how many patients did they include; and how wide were the confidence intervals around the effect estimate? Were they randomized controlled trials? Did the studies have important limitations, such as lack of blinding or large or differential loss to follow-up in the compared groups? The questions thus far relate to judgments regarding imprecision and risk of bias.

But there are also other important questions. Is there evidence that more studies of this treatment were conducted, but some were inaccessible to the reviewers? If so, how likely is it that the results of the review reflect the overall experience with this treatment? Did the trials have similar or widely varying results? Was the outcome measured at an appropriate time, or were the studies too short in duration to have much relevance? What part of the body was involved in the interventions (and thus, to what part of the body can we confidently apply these results)? These latter questions refer to categories of publication bias, inconsistency and indirectness. Without answers to (or at least information about) these questions, it is not possible to determine how much confidence to attach to the reported NNT and confidence intervals.
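To make the link between the NNT, the number of patients, and the width of the confidence interval concrete, here is a minimal sketch. The counts are hypothetical and chosen only so that the point estimate of the NNT is 6; they are not taken from the Cochrane review. The interval uses a simple Wald approximation for the risk difference, which is an assumption of this sketch rather than the method used in any particular review.

```python
import math


def risk_difference_ci(success_t, n_t, success_c, n_c, z=1.96):
    """Risk difference (treatment minus control) with a Wald 95% interval."""
    p_t = success_t / n_t
    p_c = success_c / n_c
    rd = p_t - p_c
    se = math.sqrt(p_t * (1 - p_t) / n_t + p_c * (1 - p_c) / n_c)
    return rd, rd - z * se, rd + z * se


def nnt_with_ci(success_t, n_t, success_c, n_c):
    """NNT = 1 / risk difference; the interval is the inverted RD interval."""
    rd, lower, upper = risk_difference_ci(success_t, n_t, success_c, n_c)
    if lower <= 0:
        # The interval includes "no benefit", so the NNT has no simple range.
        raise ValueError("risk-difference interval includes zero")
    return 1 / rd, 1 / upper, 1 / lower


# Hypothetical pooled data: 150/300 successes on treatment, 100/300 on placebo.
nnt, nnt_low, nnt_high = nnt_with_ci(150, 300, 100, 300)
print(f"NNT {nnt:.1f} (95% CI {nnt_low:.1f} to {nnt_high:.1f})")

# The same proportions in a tenfold smaller body of evidence are too imprecise.
try:
    nnt_with_ci(15, 30, 10, 30)
except ValueError as err:
    print("Small sample:", err)
```

With these made-up numbers the same point estimate of 6 is compatible with an interval of roughly 4 to 11 in the larger sample, while in the smaller sample the data cannot exclude no benefit at all. That difference is what a judgment about imprecision captures.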

GRADE identified its five categories – risk of bias; imprecision; inconsistency; indirectness; and publication bias – because they address all the issues that bear on confidence in estimates. For any given question, moreover, information about each of these categories is likely to be essential to judge whether the estimate is likely to be correct.

Systematic review authors must rate confidence on an outcome-by-outcome basis.

The need to rate confidence on an outcome-by-outcome basis follows from the variability in confidence that regularly occurs across outcomes. For example, a recent evidence summary of low molecular weight heparin versus vitamin K antagonists in major orthopedic surgery found high quality evidence from multiple randomized trials for the outcomes of nonfatal pulmonary embolus and total mortality [7]. Because of inconsistency (widely varying results across studies) and indirectness (studies measured asymptomatic rather than symptomatic deep venous thrombosis), evidence for symptomatic deep venous thrombosis warranted only low confidence. A single rating of confidence in estimates across outcomes would therefore be inappropriate.
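One way to keep ratings tied to outcomes is to record them as one entry per outcome, each with its own reasons. The sketch below uses the three outcomes and ratings from the example above; the class and function names are hypothetical and serve only as illustration.

```python
from dataclasses import dataclass, field
from typing import List


@dataclass
class OutcomeRating:
    outcome: str
    confidence: str  # "high", "moderate", "low", or "very low"
    reasons: List[str] = field(default_factory=list)  # reasons for rating down


# Ratings as reported in the orthopedic surgery example in the text.
ratings = [
    OutcomeRating("nonfatal pulmonary embolus", "high"),
    OutcomeRating("total mortality", "high"),
    OutcomeRating("symptomatic deep venous thrombosis", "low",
                  ["inconsistency", "indirectness"]),
]


def summarize(rows):
    """Return one summary line per outcome, keeping each rating separate."""
    lines = []
    for r in rows:
        note = f" (rated down for {', '.join(r.reasons)})" if r.reasons else ""
        lines.append(f"{r.outcome}: {r.confidence}{note}")
    return lines


for line in summarize(ratings):
    print(line)
```

Collapsing these rows into a single label would either overstate confidence for deep venous thrombosis or understate it for the other two outcomes.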

Conclusion

Every systematic review should provide information about each of the categories noted in the figure above and the associated discussion. Decision-makers, whether they are guideline developers or clinicians, find it difficult to use a systematic review that does not provide this information. Good systematic reviews have commonly emphasized appraisal of the risk of bias (study limitations) using explicit criteria. Often, however, the focus has been on assessments across outcomes for each study rather than on each important outcome across studies, and the assessment of confidence has been restricted to risk of bias. The structure we are suggesting addresses, in a consistent and systematic manner, all the key questions that are pertinent to rating confidence in estimates of effect for individual outcomes relevant to a particular question.

References

1. Guyatt GH, Oxman AD, Vist G, Kunz R, Brozek J, Alonso-Coello P, et al. GRADE guidelines: 4. Rating the quality of evidence–study limitations (risk of bias). J Clin Epidemiol 2011;64(4):407-15.

2. Guyatt GH, Oxman AD, Montori V, Vist G, Kunz R, Brozek J, et al. GRADE guidelines: 5. Rating the quality of evidence-publication bias. J Clin Epidemiol 2011.

3. Guyatt G, Oxman AD, Kunz R, Brozek J, Alonso-Coello P, Rind D, et al. GRADE guidelines: 6. Rating the quality of evidence-imprecision. J Clin Epidemiol 2011.

4. Guyatt GH, Oxman AD, Kunz R, Woodcock J, Brozek J, Helfand M, et al. GRADE guidelines: 7. Rating the quality of evidence-inconsistency. J Clin Epidemiol 2011.

5. Guyatt GH, Oxman AD, Kunz R, Woodcock J, Brozek J, Helfand M, et al. GRADE guidelines: 8. Rating the quality of evidence-indirectness. J Clin Epidemiol 2011.

6. Guyatt GH, Oxman AD, Sultan S, Glasziou P, Akl EA, Alonso-Coello P, et al. GRADE guidelines: 9. Rating up the quality of evidence. J Clin Epidemiol 2011.

7. Falck-Ytter Y, Francis CW, Johanson NA, Curley C, Dahl OE, Schulman S, et al. Prevention of VTE in orthopedic surgery patients: Antithrombotic Therapy and Prevention of Thrombosis, 9th ed: American College of Chest Physicians Evidence-Based Clinical Practice Guidelines. Chest 2012;141(2 Suppl):e278S-325S.